This week I changed my process a little. I'm spending more time planning up front: working on a project mind map/process map, laying out my blog, and defining what I'm going to do before jumping into the lab. I think of Project Objectives as what is planned in order to accomplish the project goals/deliverables. I'll update this Objectives section as I clear each hurdle during this week's efforts. You guessed it: this week's theme is a track & field relay race.

Objectives (What):

- Complete Analysis Lab

- Explore data in ArcMap

- Further explore data in ERDAS IMAGINE

- Analyze Group 1 Landsat image

- Step 1: Prepare your Landsat image for analysis (L2010_p17r33.img)

- Use the Composite Bands tool to create a single raster dataset

- Use the Extract By Mask tool to clip to the basin raster

- Step 2: Conduct unsupervised image classification (clstr_2010_50_md.img)

- Step 3: Reclassify imagery (Rclss_p17r33.img)

- Step 4: Update MTR Story Map Journal

- Look ahead: Anticipate how to present/report on this week's endeavors and envision what some next steps might look like.

- Complete process summary

- Screenshot of reclassified MTR raster

- Finalize this blog and update the MTR Story Map Journal:

- https://pns.maps.arcgis.com/apps/MapJournal/index.html?appid=d020fe9fd64d490dba2f3c64b856e596
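Step 1 relies on two ArcMap geoprocessing tools, Composite Bands and Extract By Mask. As a rough illustration of what they do (not how ArcGIS implements them), the pure-Python sketch below stacks single bands into one multiband raster and then blanks out cells that fall outside a basin mask. It assumes each band is a small 2D list of values and the mask is a 2D list of 0/1 flags; the function names and the `NODATA` placeholder are hypothetical.

```python
from typing import List

Band = List[List[float]]
NODATA = None  # hypothetical stand-in for a raster NoData value


def composite_bands(bands: List[Band]) -> List[List[List[float]]]:
    """Stack single-band rasters into one multiband raster: each cell becomes
    a list holding that pixel's value from every band."""
    rows, cols = len(bands[0]), len(bands[0][0])
    return [[[band[r][c] for band in bands] for c in range(cols)] for r in range(rows)]


def extract_by_mask(raster, mask: List[List[int]]):
    """Keep cells where the mask is 1 and set every other cell to NODATA,
    the same idea as clipping the image to the basin raster."""
    return [
        [cell if mask[r][c] else NODATA for c, cell in enumerate(row)]
        for r, row in enumerate(raster)
    ]


band1 = [[10, 12], [50, 52]]
band2 = [[20, 22], [60, 62]]
basin = [[1, 0], [1, 1]]  # hypothetical basin mask: top-right cell is outside

stack = composite_bands([band1, band2])
clipped = extract_by_mask(stack, basin)
print(clipped)  # [[[10, 20], None], [[50, 60], [52, 62]]]
```

In the lab itself these steps are run with the actual ArcMap tools on the full L2010_p17r33.img bands; the toy arrays only show the shape of the operation.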

So what are we going to do with last week's accomplishments this week? Last week's deliverables are not the subject of this week's analysis. However, the boundaries produced last week can serve as a window for viewing this week's image classification. This week we continued looking for signs of mountaintop removal (MTR) using another type of imagery: Landsat imagery. The tools for inspecting the Landsat imagery are ERDAS IMAGINE and ArcMap 10.6.1. Recall that the overall study region was divided into multiple groups; I'm still a member of Group 1. The area assigned to my group spans several Landsat rasters. As my portion of the work I analyzed one raster, L2010_p17r33.img, while other members worked with the other rasters. This week's focus was visually looking for evidence of mountaintop removal, analyzing the image with unsupervised image classification, creating a new cluster property (Class_Name), and assigning each cluster a Class_Name value ("MTR" or "non-MTR"). Below is a reminder of the study region I created in last week's post.

So what actually happened during this week's analysis phase? For starters, getting reacquainted with ERDAS IMAGINE was an arduous task, reminiscent of last week when I was starting to use IDLE to review the starter template script. After clearing this first hurdle (ERDAS), next came remembering lessons learned in a past remote sensing class about image classification. The next series of hurdles involved understanding the basic types of image classification: what's the difference between supervised and unsupervised image classification? After some internet searching, I was quickly back on track at a high level. Basically, unsupervised image classification is driven by a software clustering algorithm that groups pixels with similar spectral values (no training samples required), while supervised classification is human-guided: an analyst selects training samples of known land cover that the algorithm uses to learn what each class looks like. That's how much of this week went: remembering the past as I worked through each step of the lab instructions.

Exploring this week's Classification/Reclassification endeavors

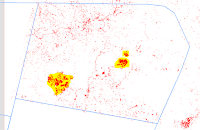

The image to the right depicts this week's deliverable outputs. My plan for this map was to show how last week's boundaries intersect this week's analysis, so I'm using color on top of a grayscale background to highlight this week's area of interest. The extent outlined in red, with a white background, depicts the Landsat image I investigated this week. Each pixel in this area was examined in ERDAS IMAGINE using an unsupervised classification process that, per the lab instructions, sorted the pixels into fifty clusters based on each pixel's spectral reflectance values. I then examined the image in known areas of MTR, added a custom property (Class_Name) to the clusters, and for the suspected MTR clusters set Class_Name to "MTR" and assigned them the color red. For all other clusters, I set Class_Name to "non-MTR" and assigned no color. The Class_Name field provides an easy way to query all pixels associated with MTR, which will be important later when we clip/mask the full extent of the image to a smaller region, the Group 1 study area. Note that the majority of my Landsat image, "P17R33", is outside the area of interest (AOI) of the Group 1 study area.
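The Class_Name step boils down to a lookup table from cluster ID to label, followed by a query on that label. The sketch below shows the idea on a tiny cluster raster; the raster values, the set of cluster IDs judged to be MTR, and the `reclassify` helper are all hypothetical (the real image has fifty clusters, and the choice of MTR clusters came from visual inspection in ERDAS IMAGINE).

```python
from typing import Dict, List

# Hypothetical cluster raster produced by unsupervised classification (IDs 1..5).
cluster_raster: List[List[int]] = [
    [1, 2, 2],
    [3, 4, 5],
    [5, 5, 1],
]

# Hypothetical analyst decision after inspecting known MTR areas.
mtr_clusters = {2, 5}

# Class_Name attribute: every cluster is labeled "MTR" or "non-MTR".
class_name: Dict[int, str] = {
    cid: ("MTR" if cid in mtr_clusters else "non-MTR") for cid in range(1, 6)
}


def reclassify(raster: List[List[int]], lookup: Dict[int, str]) -> List[List[int]]:
    """Return a binary raster: 1 where the pixel's cluster is labeled MTR, else 0."""
    return [[1 if lookup[cid] == "MTR" else 0 for cid in row] for row in raster]


mtr_raster = reclassify(cluster_raster, class_name)
print(mtr_raster)  # [[0, 1, 1], [0, 0, 1], [1, 1, 0]]
print(sum(map(sum, mtr_raster)))  # 5 suspect MTR pixels
```

Every 1 in the output is only a suspect MTR pixel: roads, clearings, and clouds can fall into the same clusters as real MTR sites.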

When I zoomed in to inspect known areas of MTR, panning around made it obvious that not all suspect clusters were valid MTR sites. Authentic MTR areas are a kind of human-made landscape change, similar to urbanization (big machines were used to reshape the landscape). Hence, it's important to remember that not every red location classified as "MTR" is a true MTR site.

Let me repeat to be very clear: NOT all red clusters are valid MTR locations.

Unsupervised classification does not use any training samples, so other types of urbanization (roads, buildings, lot clearing) will obscure the identification of valid MTR clusters. Atmospheric interference (clouds, moisture, etc.) will further complicate identification, since it can have spectral reflectance traits similar to those of real MTR locations. So be mindful of urbanization and the various types of interference when viewing this map. I don't want to mislead map viewers into thinking that all this red is valid MTR mining. On the contrary, these red clusters are merely the results of unsupervised classification: suspect MTR locations that will require ground truthing to verify.

To the left is a close look at my Group 1 region. Linear features are easy to infer as roadways, but it's difficult to identify legitimate MTR locations with certainty. I've highlighted two regions that are authentic MTR clusters. Visit next week as we continue the search for evidence of MTR mining as the various types of interference are stripped away.

What was learned/remembered this week?

This week was definitely a flashback to the Remote Sensing course of yesteryear. Relearning the two common types of image classification was the standout hurdle this week.

What was fun and/or challenging this week?

Grayscale maps continue to be fun; they let the area of interest take center stage. The not-so-fun part this week was making sense of unsupervised classification. It was tough getting started with ERDAS IMAGINE, but I managed to plow through it. I'm sure that with more practice, this task will become fun someday!

What were some Weekly Positives?

Completing the Landsat image classification using unsupervised classification was definitely a positive. A subtler positive was noticing the pattern of putting information into a system, doing something with that input, and producing an output. We see this basic model everywhere.

Visualizing the importance of input-process-output (IPO).

This simple model is so subtle that it is easily overlooked. But when you're looking for it, it's a common underlay in many technical and non-technical (economic) processes. Take, for instance, coal as an input for the steel industry and steel as an input for the coal industry, or the car industry, which may need both coal and steel as inputs to output cars. Here the outputs of one industry become inputs that other industries use to produce final goods (outputs). IPO is ubiquitous if you look closely enough; it's the basic model that processes follow.

In summary, the main objective this week was to create a reclassified raster using a combination of ERDAS IMAGINE and ArcMap to identify areas of suspected MTR. Now that we have completed two-thirds of this MTR project, it's time to look ahead to the final week and envision a final presentation that reports our MTR mining findings. Below is an outline of high-level objectives for next week.

- Convert MTR raster to polygons using Raster to Polygon (Conversion Tool)

- Remove MTR areas that are smaller than a given acreage.

- Create buffers around roads and rivers, removing MTR areas that fall within those buffers.

- Perform an accuracy test using random points on your map.

- Compare your 2010 MTR data with the 2005 dataset.

- Package your dataset for your group leader.

- Compile group data into a single dataset for group study area (group leader only).

- Create a map service presenting your group findings with ArcGIS Online (UWF organization).
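Next week's steps run in ArcMap on polygons, but the "remove MTR areas smaller than a given acreage" idea can be sketched on the reclassified 0/1 raster. The code below groups touching MTR pixels into 4-connected regions with a flood fill and drops regions below an acreage threshold. It assumes 30 m pixels (the usual Landsat multispectral resolution) and an arbitrary threshold; the function name and example values are hypothetical, and the lab's actual polygon-based workflow may measure areas differently.

```python
from typing import List

SQ_METERS_PER_ACRE = 4046.8564224
PIXEL_AREA_M2 = 30 * 30  # assumption: 30 m x 30 m Landsat pixels


def remove_small_regions(raster: List[List[int]], min_acres: float) -> List[List[int]]:
    """Zero out 4-connected MTR regions (cells == 1) smaller than min_acres."""
    rows, cols = len(raster), len(raster[0])
    out = [row[:] for row in raster]  # leave the input raster untouched
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if raster[r][c] != 1 or seen[r][c]:
                continue
            # Flood-fill one region using an explicit stack.
            region, stack = [], [(r, c)]
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (
                        0 <= ny < rows
                        and 0 <= nx < cols
                        and raster[ny][nx] == 1
                        and not seen[ny][nx]
                    ):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            acres = len(region) * PIXEL_AREA_M2 / SQ_METERS_PER_ACRE
            if acres < min_acres:
                for y, x in region:
                    out[y][x] = 0
    return out


mtr = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
# The 4-pixel block is about 0.89 acres and is kept; the lone pixel
# (about 0.22 acres) falls below the 0.5-acre threshold and is removed.
print(remove_small_regions(mtr, min_acres=0.5))
```

Working on raster regions keeps the sketch dependency-free; in ArcMap the same filter would more naturally be a query on polygon area after Raster to Polygon.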

My relay-race playlist looks like this.

First pick was "Cruise" by FL GA Line:

https://www.youtube.com/watch?v=8PvebsWcpto

Off topic, another favorite of mine by FL GA Line is "Simple":

https://www.youtube.com/watch?v=3GeaYy6zlXU

References:

• https://articles.extension.org/pages/40214/whats-the-difference-between-a-supervised-and-unsupervised-image-classification

• https://gisgeography.com/image-classification-techniques-remote-sensing/

• https://gisgeography.com/supervised-unsupervised-classification-arcgis/

• https://www.youtube.com/watch?v=8PvebsWcpto