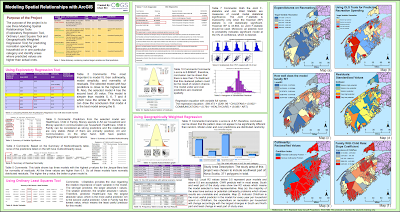

In general, the lecture material was hard for me to wrap my mind around all the moving parts associated with regression analysis. We learned about three types of regression analysis: explanatory, Geographically Weighted Regression (GWR), and Ordinary Least Squares (OLS). I found a nice project online that compared these three type of regression that helped me out this week. The project is called "Modeling Spatial Relationships with ArcGIS" and it was created by Chen Shi.

What I discovered was that regardless of the type of regression, they all have in common a core concept: to examine the influence of one or more independent (socioeconomic factors) variables on a dependent (Meth Lab Density) variable. I choose to think of regression as a line of best fit, which is a line called Y-hat. In statistics, there is a term called Line-hat, which refers to predictions of true values. Hence, a prediction of Y for a given value of x equates to an expression describing the best fit line through some observed/actual values. Below is an example of a regression line:

Y-hat = y-intercept + coefficient(slope) of x

The above equation may look familiar. I'm referring to the point-slope equation of a line often taught in algebra: y = mx + b (The two bivariant variables are x (dependent variable) and y (independent variable), m is the slope (coefficient), and b is the y-intercept). But the point-slope equation is missing a variable referred to as the error portion of the dependent variable that isn't explained by the model, which is the difference between the actual and predicted values. The missing variable is called residuals. Below is a vocabulary list to help explain the equation that forms the model being built by the regression method and what we learned these past two weeks:

- Dependent variable (Y): what we are trying to model or predict (Meth Lab Density)

- Explanatory variables (X): variables we believe influence or help explain the dependent variable (e.g., population, education, gender, income, etc.)

- Coefficients (ß): values reflecting the relationship and strength of each explanatory variable to the dependent variable.

- Residuals (ε): the portion of the dependent variable that is not explained by the model (the model under and over-predictions).

Using the variables above, a simple and more informative regression equation would look like the following: Y = ßX + ε. In actuality though, the equation is more complicated and it more resembles the graphic below. It's the gist of what we explored this week.

During the Lab portion of this project, I learned how to leverage ArcMap's Spatial Analyst extension to reveal Spatial Statistics Tools and Toolsets to perform regression analysis. The lab was tedious to perform; but it allowed me to run the OLS tool which showed me how to model, examine, and explore spatial relationships, to better understand the socioeconomic factors (age, income, sex, etc) behind observed spatial patterns that explained the location of 176 known meth lab seizures. It was a mentally painful experience to really try and understand all the statistics going on in this lab. And no real class involvement made for a poor learning experience.

The end Goal (Why) for this Project phase:

- Understand the core concept of regression analysis

- Learn about three types of regression, Exploratory, GWR, and OLS

- Explore how to use OLS to limit 29 variable candidates to make predictions

The Objectives (What) were as follows:

- Perform and explore the method of Ordinary Least Squares to limit 29 variable candidates to make predictions

- Write Methods & Results sections of a final report paper

- Create a map showing StdResidual results from OLS model

- Continue to learn about linear regression and establish a better understanding of this predictive type of analysis

What was learned during the last two weeks?

- Y-hat (

) = is a symbol that represents the predicted equation for a line of best fit in linear regression.

) = is a symbol that represents the predicted equation for a line of best fit in linear regression. - The equation takes the form:

= a + bx, where b is the slope and a is the y-intercept.

= a + bx, where b is the slope and a is the y-intercept. - Y-bar = the mean(avg) value of Y (dependent variable)

- SSR = Σ(y-hat - y-bar)² (explained deviation)

- SSE = Σ()² (unexplained deviation)

- Limiting 29 candidate factors to 7 was painful!

What was challenging during the last two weeks?

My challenge this week was discovering how to limit 29 variable candidates. More specifically, identifying my clues to interpreting the OLS results was probably the biggest issue. Deciding which explanatory variable (EV) is to stay or be removed drove me crazy at first. This part of the lab was demanding for me to visualize and explain. The basic premise was easy: to show how known Meth Labs busts are dependent on some limited selection of explanatory socioeconomic factors such as the 2010 population per square mile, the Median age of males, percentage of whites, a percent of uneducated, etc.. But trying to understand the statistics behind the complex relationships involved in the regression analysis was painful for me.

What happened during the last two weeks?

The bulk of this weeks lab exercise involved exploring several checks through the use of the OLS regression tool that analyzed how 29 independent/explanatory US Census variables explained a dependent variable, Meth Lab Seizures. The aim was to select the best 5 to 10 independent variables that explained the location of 176 known Meth Lab seizures/busts by running the OLS tool and evaluating the regression tool's OLS Summary and Diagnostic results via the guidance of the six checks, which are questions. How well these questions were answered was the tricky part for me this week. I removed 22 of the original 29 independent variables. Below are the 7 best explanatory variables I selected using the six checks.

The lab combined checks 1-3 into one task that resulted in either a removing or leaving an explanatory variable based on if it helped or hurt the relationship of explaining the dependent (Meth Lab Density) variable. Below are snap-shots of working through each of the 6 questions. The order of answering these six questions is very important!

Question 1 - Are independent variables helping or hurting my model?

This task involved checking to see that all of the explanatory variables have statistically significant coefficients (value > 0.4). Two columns, Probability, and Robust Probability measure coefficient statistical significance. An asterisk next to the probability tells you the coefficient is significant. If a variable is not significant, it is not helping the model, and unless I thought the particular variable is critical, I removed it. When the Koenker (BP) statistic is statistically significant, you can only trust the Robust Probability column to determine if a coefficient is significant or not. Small probabilities are “better” (more significant) than large probabilities.Question 2 - Is the relationship between the independent and dependent variables what I expected?

This task involved checking to see that each coefficient value has the “expected” sign and not indicating a slope of zero. A positive coefficient indicates the relationship is positive; a negative coefficient means the relationship is negative. In the beginning, I noticed lots of high negative and positive values which I used to weigh my decision to keep the variable. I used lower values as my clue to remove the variable. And when considering the variable, I would ask myself, does this value seem reasonable for this variable to either increase or decrease the MLD.

Question 3 - Are there redundant explanatory variables?

This task involved checking for redundancy among the explanatory variables. If the VIF value (variance inflation factor) for any of your variables is larger than about 7.5 (smaller is definitely better), it means that one or more variables are telling the same story. This leads to an over-count type of bias. I used large VIF values to weigh my decision to remove the variable.

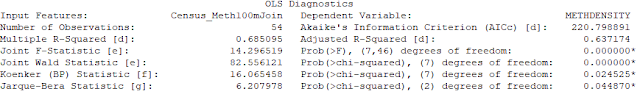

Questions 4 - 6 involved OLS result values seen in the OLS Diagnostic portion of the report shown below.

Question 4 - Is my model biased?

This task involved checking the Jarque-Bera Statistic is NOT statistically significant. The residuals (over/under predictions) from a properly specified model will reflect random noise. Random noise has a random spatial pattern (no clustering of over/under predictions). It also has a normal histogram if you plotted the residuals. The Jarque-Bera check measures whether or not the residuals from a regression model are normally distributed (think Bell Curve). This is the one test you do NOT want to be statistically significant! When it IS statistically significant, your model is biased. This often means you are missing one or more key explanatory variables.

Question 5 - Have I found all the key explanatory variables?

This task involved checking the standard map output of running the OLS tool. It's a map of the regression residuals representing model over and underpredictions. Red areas indicate that actual observed values are higher than the values predicted by the model. Blue areas show where actual values are lower than the model predicted. Statistically significant spatial autocorrelation in your model residuals indicates that you are missing one or more key explanatory variables.

Question 6 - How well am I explaining my dependent variable?

This task involved checking model performance by using the adjusted R squared value as an indicator of how much variation in your dependent variable has been explained by the model. the adjusted R squared value ranges from 0 to 1.0 and higher values are a positive indicator of performance. I watched this value increase from -6.019018 at the beginning to a value of 0.367174 at the end.

The AIC value can also be used to measure model performance. When considering AIC values, the lower the value is a gauge for a better performing model.

Each time I would remove or re-add a variable I would reiterate through the six checks above to determine if the model got better. This is where lots of patience is required! ArcMap Help has an "Interpreting OLS results" page that was very helpful.

Additional Consideration - Use GWR to improve the model

When the Koeker test is statistically significant, as it is in my model, it indicates relationships between some or all of your explanatory variables and your dependent variable are non-stationary. This means, for example, that the population variable might be an important predictor of Meth Lab Density in some locations of your study, but perhaps a weak predictor in other locations. Whenever the Koenker test is statistically significant, it indicates you will likely improve model results by using another statistical method called Geographically Weighted Regression (GWR).

The good news is that once you’ve found your key explanatory variables using OLS, running GWR is actually pretty easy. In most cases, GWR will use the same dependent and explanatory variables you used in running the OLS tool.

What's the Conclusion?

In statistic, standardized residuals (SRs) is the method of normalizing the dataset. A standardized residual (SR) is a ratio: The difference between the observed Meth Lab Density (MLD) and the expected MLD. Below is the SR equation to help visualize and explain its definition.

But what does SRs Mean? The SR is a measure of the strength of the difference between observed and expected MLD values. After running the OLS tool, it automatically generates a residuals map that I would often review to quickly see if the selected variables helped or hurt the model (the more yellow the better). In addition, the structure of the map was also helpful in analysing the results of running OLS. A good model would show a dispersed layout of over and underpredictions. Looking at the legend of the map below, the orange to red range represents over prediction, meaning the model equation predicts more MLD than actual. The gray to blue range represents under prediction, meaning the model equation predicts there is less MLD than actual. There may be a little clumping shown in the map below, but the SR layout/structure is mainly dispersed across the study area, which indicates a good model. Could it be better? Absolutely!! Actually, as I looked at this map, I realized that the handful of observations outside the two main counties (Putnam and Kanawha) could have been the outliers that prevented my model to score higher. I really wish I noticed this earlier. I should have tried running my model on just observations made in Putnam and Kanawha counties. Then maybe my model would have been closer to 1.0.

[ SR = (observed MLD - expected MLD) / √ expected MLD]

But what does SRs Mean? The SR is a measure of the strength of the difference between observed and expected MLD values. After running the OLS tool, it automatically generates a residuals map that I would often review to quickly see if the selected variables helped or hurt the model (the more yellow the better). In addition, the structure of the map was also helpful in analysing the results of running OLS. A good model would show a dispersed layout of over and underpredictions. Looking at the legend of the map below, the orange to red range represents over prediction, meaning the model equation predicts more MLD than actual. The gray to blue range represents under prediction, meaning the model equation predicts there is less MLD than actual. There may be a little clumping shown in the map below, but the SR layout/structure is mainly dispersed across the study area, which indicates a good model. Could it be better? Absolutely!! Actually, as I looked at this map, I realized that the handful of observations outside the two main counties (Putnam and Kanawha) could have been the outliers that prevented my model to score higher. I really wish I noticed this earlier. I should have tried running my model on just observations made in Putnam and Kanawha counties. Then maybe my model would have been closer to 1.0.

In summary, this project demonstrated how to better understand some of the factors contributing to the spread of Meth Labs in a few West Virginia counties, by using Ordinary Least Squares (OLS) regression to limit the 29 candidate factors to a subset of 7 factors. The scatterplot matrix tool was used to improve the model by exploring the histograms of candidate explanatory variables that might improve the model. I also noted the Koenker test was statistically significant meaning a switch to using the GWR could result in an improved regression model. When executed successfully, regression analysis could provide a community with a number of important insights to help uncover more meth labs.

- ZedStatistics, https://www.youtube.com/watch?v=aq8VU5KLmkY&t=558s

- MathBits, https://mathbits.com/MathBits/TISection/Statistics1/LineFit.htm

- Interpreting OLS results, http://desktop.arcgis.com/en/arcmap/latest/tools/spatial-statistics-toolbox/interpreting-ols-results.htm

- AI & Machine Learning, https://www.youtube.com/watch?v=KCkGif6wSMo

No comments:

Post a Comment