Understanding the big picture of Damage Assessment

Damage Assessment (DA) is a broad term that covers multiple components and is itself part of a much larger domain called Emergency Management (EM). As you might imagine, there is an Emergency Management Institute (EMI) to cover all aspects of Incident Management, which is formalized as the National Incident Management System (NIMS). Whether or not your EM business has a response and recovery team to perform pre-landfall DA data collection as well as post-hurricane functions, it's important to understand the big picture of DA and how it connects with decision-making and communication.

NIMS provides a framework for establishing a good communication plan as well as an Emergency Operations Center (EOC). There is no sense in reinventing anything that falls under the umbrella of EM, especially when it comes to communication. Incident Management is an ongoing project for the Federal Emergency Management Agency (FEMA), with continual improvements based on years of lessons learned.

Keeping the End in Mind

Typically, an EOC serves as the central hub through which local DA results are funneled up the chain of command to inform the State Governor of DA efforts. The immediate goal of DA (36-72 hours after landfall) is to report an estimated dollar amount of the damage caused by an incident.

Remote Data Collection

This week's lab effort did not involve a boots-on-the-ground assignment. Instead, the lab resembled a crowdsourced rapid damage assessment (rDA), where all students evaluated and reported results on the same study area in Toms River Township, New Jersey.

Prior to performing rDA, there could have been some pre-disaster planning tasks to generate several inundation layers to help identify flood-prone inspection zones, as performed in Module 5.

For this module, pre-disaster tasks centered on collecting the data necessary to perform rDA.

The primary layers for this analysis were pre- and post-event high-resolution imagery, parcels, and the study area. Some other nice-to-have layers would have been a building layer, address layer, mobile home layer, Assisted Living Facility layer, and shelter layer.

I quickly generated a building point layer by using the Feature to Point geoprocessing tool to copy the parcel centroid geometry, then repositioned each point over a rooftop as I reviewed the pre-event high-resolution imagery. Next, I swiped back and forth between the pre- and post-event imagery to estimate the level of damage based on four of FEMA's five degrees of damage: Destroyed, Major, Minor, Affected, and Inaccessible. I used FEMA's preliminary damage assessment field guide to remind myself what the five degrees of damage meant, and then did my best to perform a remote initial damage assessment of the study area.
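To illustrate what the centroid step does conceptually, here is a minimal sketch in plain Python that computes an area-weighted centroid for each parcel polygon and seeds an empty damage record at that location. This is not the ArcGIS tool itself; the parcel IDs, coordinates, and the `damage_points` structure are hypothetical and exist only for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def polygon_centroid(ring: List[Point]) -> Point:
    """Area-weighted centroid of a simple polygon (shoelace formula)."""
    # Drop a repeated closing vertex so each edge is visited exactly once.
    if len(ring) > 1 and ring[0] == ring[-1]:
        ring = ring[:-1]
    twice_area = 0.0
    cx = 0.0
    cy = 0.0
    n = len(ring)
    for i in range(n):
        x0, y0 = ring[i]
        x1, y1 = ring[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if twice_area == 0:
        raise ValueError("degenerate polygon with zero area")
    # Centroid = sum / (6 * area), and 6 * area == 3 * twice_area.
    return (cx / (3.0 * twice_area), cy / (3.0 * twice_area))


# Hypothetical parcels keyed by a made-up parcel ID (not real data).
parcels: Dict[str, List[Point]] = {
    "P-001": [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)],
    "P-002": [(10.0, 10.0), (12.0, 10.0), (12.0, 14.0), (10.0, 14.0), (10.0, 10.0)],
}

# One DA point per parcel; the damage level is filled in later by the analyst.
damage_points = {
    pid: {"xy": polygon_centroid(ring), "damage": None}
    for pid, ring in parcels.items()
}
```

A parcel centroid is only a starting location. For irregular or large lots it can land in a yard or driveway, which is why each point still had to be moved onto the rooftop by hand.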

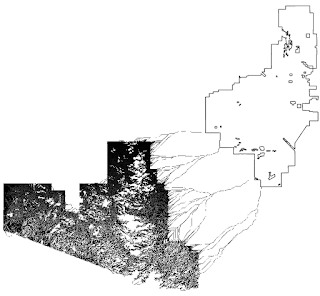

Below is a map I created from this lab assignment.

Next Steps

Below are some potential next steps to help assess the monetary impact of the incident:

- Windshield survey/inspections in response to citizen reports and remote rapid DA results

- Field Data Collection (Boots on the Ground)

- QA/QC DA results

- Report DA Results to EOC (After-Action Report)

The monetary value is typically provided by the county property appraiser's office. For this lab, there was no such data to report or configure to support decision-making.
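If appraised values were available, the roll-up to a dollar estimate could be sketched roughly as follows. Everything here is an assumption for illustration: the loss ratios are made-up placeholders (not FEMA or appraiser figures), the records are invented, and structures marked Inaccessible are counted but left out of the dollar total because their condition is unknown.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical share of appraised value lost per damage level.
# Placeholder numbers only; NOT official FEMA or appraiser figures.
ASSUMED_LOSS_RATIO: Dict[str, float] = {
    "Destroyed": 1.0,
    "Major": 0.5,
    "Minor": 0.2,
    "Affected": 0.05,
}


def summarize(assessments: List[dict]) -> Tuple[Counter, float]:
    """Count structures per damage level and estimate total dollar loss."""
    counts = Counter(a["damage"] for a in assessments)
    estimated_loss = 0.0
    for a in assessments:
        ratio = ASSUMED_LOSS_RATIO.get(a["damage"])
        if ratio is None:
            # e.g. "Inaccessible": no basis for an estimate yet, so skip it.
            continue
        estimated_loss += a["appraised_value"] * ratio
    return counts, estimated_loss


# Invented records standing in for DA points joined to appraiser data.
sample = [
    {"parcel_id": "P-001", "damage": "Destroyed", "appraised_value": 200_000},
    {"parcel_id": "P-002", "damage": "Major", "appraised_value": 300_000},
    {"parcel_id": "P-003", "damage": "Minor", "appraised_value": 100_000},
    {"parcel_id": "P-004", "damage": "Inaccessible", "appraised_value": 250_000},
]

counts, estimated_loss = summarize(sample)
```

Keeping the Inaccessible count separate makes it clear to the EOC how much of the study area still needs a field visit before the dollar figure can be treated as complete.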

New Storymap

Below is a link to an ArcGIS StoryMap about conducting rDA after Superstorm Sandy:

https://storymaps.arcgis.com/stories/47e462e159b54bda824a1ddbd4bf6faa

In Summary

Damage Assessment was described in the context of Incident Management and decision making.

I used the parcel layer to establish the targets for DA by running the Feature to Point geoprocessing tool. I placed DA points over rooftop structures as I reviewed the pre-event imagery. Using both the pre- and post-event imagery, I performed a remote rapid damage assessment of public and private structures based on my observations.

Some nice-to-have layers might be a drone imagery layer to help assess inundation, a trained model to assess rooftop damage as an indicator of structural and major damage, and various other layers to support other types of mapping and analysis. Some examples of those other layers are a building layer, address layer, mobile home layer, Assisted Living Facility layer, and shelter layer.